Audio Analysis

Understanding and processing audio data to create responsive, synchronized visualizations

The Foundation of Audio Reactive Visuals

Audio analysis is the cornerstone of creating effective audio reactive visualizations. By extracting meaningful data from audio signals, we can create visual elements that respond organically to music, enhancing the connection between what audiences hear and see.

Effective audio analysis involves more than simply measuring volume. By breaking down audio into its component parts—frequency bands, rhythmic elements, tonal characteristics—we can create visualizations that respond to the nuanced structure of music, highlighting different instruments, beats, and emotional qualities.

Advanced Audio Features with Meyda

Feature Descriptions

- Spectral Centroid: Represents the "center of mass" of the spectrum. Higher values indicate "brighter" sounds with more high frequencies.

- Spectral Flatness: Measures how noise-like vs. tone-like a sound is. Higher values indicate more noise-like sounds.

- Perceptual Spread: Indicates how "spread out" the spectrum is around its perceptual centroid.

- Spectral Rolloff: Frequency below which a fixed share of the spectrum's energy is contained. 85% is a common convention; Meyda's own implementation uses 99%. Higher values indicate more high-frequency content.
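To make the definitions above concrete, here is a minimal plain-JavaScript sketch of three of these features computed from a magnitude spectrum. It is not Meyda's implementation: the function names, the `magnitudes` array (one non-negative value per frequency bin) and the `fraction` parameter are assumptions made for illustration, and the rolloff here accumulates magnitudes rather than squared energy.

```javascript
// Illustrative helpers, not Meyda's API.
// `magnitudes`: non-negative bin magnitudes covering 0 Hz up to Nyquist.
function spectralCentroid(magnitudes, sampleRate) {
  const binWidth = sampleRate / (2 * magnitudes.length); // Hz per bin
  let weighted = 0;
  let total = 0;
  for (let i = 0; i < magnitudes.length; i++) {
    weighted += i * binWidth * magnitudes[i];
    total += magnitudes[i];
  }
  return total > 0 ? weighted / total : 0; // magnitude-weighted mean frequency
}

function spectralFlatness(magnitudes) {
  // Geometric mean divided by arithmetic mean; epsilon avoids log(0).
  const eps = 1e-12;
  let logSum = 0;
  let sum = 0;
  for (const m of magnitudes) {
    logSum += Math.log(m + eps);
    sum += m;
  }
  const n = magnitudes.length;
  const arithmeticMean = sum / n;
  return arithmeticMean > 0 ? Math.exp(logSum / n) / arithmeticMean : 0;
}

function spectralRolloff(magnitudes, sampleRate, fraction = 0.85) {
  const binWidth = sampleRate / (2 * magnitudes.length);
  const total = magnitudes.reduce((s, m) => s + m, 0);
  const threshold = fraction * total;
  let cumulative = 0;
  for (let i = 0; i < magnitudes.length; i++) {
    cumulative += magnitudes[i];
    if (cumulative >= threshold) return i * binWidth; // first bin reaching the share
  }
  return 0;
}
```

A flat spectrum gives a flatness near 1 (noise-like), while a single spike gives a flatness near 0 (tone-like) and a centroid at the spike's frequency.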

Radar Visualization of Audio Features

This radar/polar visualization displays multiple audio features simultaneously, inspired by the Meyda.js.org homepage. The distance from the center represents the intensity of each audio feature.

Visualized Features

- Energy: Overall signal strength

- Spectral Centroid: Brightness of sound

- Spectral Flatness: Tone vs. noise balance

- Perceptual Spread: Spectrum width

- Spectral Rolloff: High frequency content

- ZCR: Zero-crossing rate

Key Audio Analysis Techniques

Several fundamental techniques form the basis of audio analysis for visualizations:

Frequency Analysis

Breaking down audio into its component frequencies using the Fast Fourier Transform (FFT). This technique converts time-domain audio data into frequency-domain data, revealing how energy is distributed across the frequency spectrum, so visualizations can respond differently to bass, midrange, and treble elements.
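The time-to-frequency conversion can be illustrated with a naive discrete Fourier transform. This is a teaching sketch only: it runs in O(n²) time, whereas an FFT produces the same result far faster, and `dftMagnitudes` is a hypothetical helper name. In the browser, the analyser node does this work for you.

```javascript
// Naive DFT: returns the magnitude of each frequency bin up to Nyquist.
// `samples` is an array of time-domain values (for example in the range -1..1).
function dftMagnitudes(samples) {
  const n = samples.length;
  const half = Math.floor(n / 2);
  const magnitudes = new Array(half);
  for (let k = 0; k < half; k++) {
    let re = 0;
    let im = 0;
    for (let t = 0; t < n; t++) {
      const phase = (2 * Math.PI * k * t) / n;
      re += samples[t] * Math.cos(phase);
      im -= samples[t] * Math.sin(phase);
    }
    magnitudes[k] = Math.sqrt(re * re + im * im) / n;
  }
  return magnitudes;
}

// Example: a 1000 Hz sine sampled at 8000 Hz, 64 samples long.
const exampleSampleRate = 8000;
const exampleSamples = Array.from({ length: 64 }, (_, t) =>
  Math.sin((2 * Math.PI * 1000 * t) / exampleSampleRate)
);
const exampleSpectrum = dftMagnitudes(exampleSamples);
```

Bin `k` corresponds to the frequency `k * sampleRate / n`, so in this example the energy concentrates in bin 8 (8 × 8000 / 64 = 1000 Hz).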

Beat Detection

Identifying rhythmic pulses and beats in audio signals. Beat detection algorithms analyze audio for sudden increases in energy or specific frequency patterns that indicate beats. This allows visualizations to synchronize with the rhythm of the music.

Amplitude Tracking

Measuring the overall volume and energy of the audio signal over time. While simpler than frequency analysis, amplitude tracking is essential for creating visualizations that respond to the dynamic range of music, growing more active during louder passages.
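One common way to track amplitude is the root mean square (RMS) of the waveform. The sketch below assumes byte-valued time-domain data of the kind the Web Audio API's `getByteTimeDomainData` produces, where 128 represents silence; the function name is a hypothetical helper.

```javascript
// RMS level of a byte waveform (values 0-255, centered on 128).
// Returns a value between 0 (silence) and roughly 1 (full scale).
function rmsFromByteTimeDomain(timeData) {
  if (timeData.length === 0) return 0;
  let sumSquares = 0;
  for (let i = 0; i < timeData.length; i++) {
    const sample = (timeData[i] - 128) / 128; // map 0-255 to about -1..1
    sumSquares += sample * sample;
  }
  return Math.sqrt(sumSquares / timeData.length);
}
```

Because RMS averages over the whole window, it changes more gradually than the instantaneous peak, which usually makes it a steadier driver for size or brightness.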

Onset Detection

Identifying the beginning of new sounds or notes in the audio. Onset detection algorithms look for rapid changes in the audio signal that indicate new events, such as a drum hit or a plucked string, creating visual events that coincide with musical events.
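One simple way to detect such rapid changes is spectral flux: the sum of the increases in each frequency bin between consecutive frames. The sketch below is one possible approach under simplifying assumptions (a fixed threshold, frames supplied as arrays of equal length); `spectralFlux` and `detectOnsets` are hypothetical names.

```javascript
// Average positive change between two spectra of equal length.
// Only rising bins count, so decaying sounds do not register as onsets.
function spectralFlux(previous, current) {
  let flux = 0;
  for (let i = 0; i < current.length; i++) {
    const diff = current[i] - previous[i];
    if (diff > 0) flux += diff;
  }
  return flux / current.length;
}

// Returns the indices of frames whose flux exceeds a fixed threshold.
function detectOnsets(frames, threshold) {
  const onsets = [];
  for (let f = 1; f < frames.length; f++) {
    if (spectralFlux(frames[f - 1], frames[f]) > threshold) {
      onsets.push(f);
    }
  }
  return onsets;
}
```

In a real-time loop you would keep a copy of the previous frame's frequency data and compare it with the current one each frame; an adaptive threshold generally copes better with changes in overall loudness than a fixed one.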

Implementation with Web Audio API

The Web Audio API provides powerful tools for audio analysis in the browser, which can be integrated with Three.js for creating audio-reactive visualizations:

Setting Up Audio Analysis

```javascript
// Create audio context (webkitAudioContext covers older Safari versions)
const audioContext = new (window.AudioContext || window.webkitAudioContext)();

// Create analyzer node
const analyser = audioContext.createAnalyser();
analyser.fftSize = 2048; // Must be a power of 2 (32 to 32768)
analyser.smoothingTimeConstant = 0.8; // Value between 0-1, higher = smoother

// Set up audio source (from file, microphone, or stream)
let audioSource;

// Example: Load audio file
// Call this from a user gesture (e.g. a click): browsers may keep the
// audio context suspended until the user interacts with the page.
async function loadAudio(url) {
  const response = await fetch(url);
  const arrayBuffer = await response.arrayBuffer();
  const audioBuffer = await audioContext.decodeAudioData(arrayBuffer);

  // Create audio source from buffer
  audioSource = audioContext.createBufferSource();
  audioSource.buffer = audioBuffer;

  // Connect source to analyzer and output
  audioSource.connect(analyser);
  analyser.connect(audioContext.destination);

  // Start playback
  audioSource.start(0);
}

// Example: Use microphone input
async function useMicrophone() {
  try {
    const stream = await navigator.mediaDevices.getUserMedia({ audio: true });
    audioSource = audioContext.createMediaStreamSource(stream);
    audioSource.connect(analyser);
    // Note: Don't connect to destination to avoid feedback
  } catch (err) {
    console.error('Error accessing microphone:', err);
  }
}

// Create data arrays for analysis
const frequencyData = new Uint8Array(analyser.frequencyBinCount); // fftSize / 2 bins
const timeData = new Uint8Array(analyser.fftSize); // waveform covers the full fftSize window

// Animation loop for continuous analysis
function analyze() {
  requestAnimationFrame(analyze);

  // Get frequency data (0-255 values)
  analyser.getByteFrequencyData(frequencyData);

  // Get waveform data (0-255 values, 128 = silence)
  analyser.getByteTimeDomainData(timeData);

  // Now use this data to update your visualizations
  updateVisualizations(frequencyData, timeData);
}
```

This setup provides the foundation for audio analysis in the browser. The key components include:

- Audio Context: The core object that handles all audio processing.

- Analyzer Node: Provides real-time frequency and time-domain analysis.

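- FFT Size: Determines the resolution of frequency analysis. The analyser exposes fftSize / 2 frequency bins, so a larger value gives finer frequency detail at the cost of a longer analysis window and a slower response to change.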

- Smoothing: Controls how rapidly the analysis responds to changes in the audio.
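The relationship between FFT size, sample rate and frequency bins can be sketched with a few small helpers. The function names are hypothetical; the arithmetic follows from the analyser reporting `fftSize / 2` bins that evenly span 0 Hz up to the Nyquist frequency (half the sample rate).

```javascript
// Width of one frequency bin in Hz.
function binWidthHz(sampleRate, fftSize) {
  return sampleRate / fftSize;
}

// Starting frequency (Hz) of a given bin.
function binToFrequency(binIndex, sampleRate, fftSize) {
  return (binIndex * sampleRate) / fftSize;
}

// Nearest bin for a frequency, clamped to the valid range 0..fftSize/2 - 1.
function frequencyToBin(frequency, sampleRate, fftSize) {
  const bin = Math.round((frequency * fftSize) / sampleRate);
  return Math.min(Math.max(bin, 0), fftSize / 2 - 1);
}

// Duration of audio covered by one analysis window, in milliseconds.
function windowDurationMs(sampleRate, fftSize) {
  return (fftSize / sampleRate) * 1000;
}
```

At a 48000 Hz sample rate with `fftSize = 2048`, each bin is about 23.4 Hz wide and each window spans about 42.7 ms. Doubling the FFT size halves the bin width but doubles the window length, which is the trade-off between frequency detail and responsiveness.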

Frequency Band Separation

One of the most powerful techniques for creating nuanced visualizations is separating audio into frequency bands:

```javascript
// Define frequency bands (in Hz)
const bands = {
  bass: { min: 20, max: 250 },
  lowMid: { min: 250, max: 500 },
  mid: { min: 500, max: 2000 },
  highMid: { min: 2000, max: 4000 },
  treble: { min: 4000, max: 20000 }
};

// Calculate average amplitude for a frequency band
function getFrequencyBandValue(frequencyData, band) {
  const nyquist = audioContext.sampleRate / 2;
  const lastIndex = frequencyData.length - 1;

  // Convert Hz to bin indices, clamped so that bands reaching past the
  // Nyquist frequency cannot read beyond the end of the array
  const lowIndex = Math.min(lastIndex, Math.round(band.min / nyquist * frequencyData.length));
  const highIndex = Math.min(lastIndex, Math.round(band.max / nyquist * frequencyData.length));

  let total = 0;
  let count = 0;
  for (let i = lowIndex; i <= highIndex; i++) {
    total += frequencyData[i];
    count++;
  }

  return total / count / 255; // Normalize to 0-1
}

// Usage in animation loop
function updateVisualizations(frequencyData) {
  const bassValue = getFrequencyBandValue(frequencyData, bands.bass);
  const midValue = getFrequencyBandValue(frequencyData, bands.mid);
  const trebleValue = getFrequencyBandValue(frequencyData, bands.treble);

  // Now use these values to drive different aspects of your visualization
  // For example:
  particleSystem.scale.set(1 + bassValue * 2, 1 + midValue * 2, 1 + trebleValue * 2);
}
```

By separating audio into frequency bands, you can create visualizations where:

- Bass frequencies control the size or position of elements

- Mid-range frequencies affect color or opacity

- High frequencies drive particle emission or detail elements

This creates a more nuanced connection between the audio and visual elements, making the visualization feel more musical and responsive.

Beat Detection Algorithms

Accurate beat detection is crucial for creating visualizations that feel synchronized with music:

```javascript
// Simple energy-based beat detection
class BeatDetector {
  constructor(sensitivity = 1.5, decayRate = 0.95, minThreshold = 0.3) {
    this.sensitivity = sensitivity;
    this.decayRate = decayRate;
    this.minThreshold = minThreshold;
    this.threshold = this.minThreshold;
    this.lastBeatTime = 0;
    this.energyHistory = [];
  }

  detectBeat(frequencyData) {
    // Calculate current energy from the lowest 10% of bins (at least one bin).
    // With 1024 bins at 44.1 kHz this spans roughly 0-2.2 kHz.
    let energy = 0;
    const bassRange = Math.max(1, Math.floor(frequencyData.length * 0.1));
    for (let i = 0; i < bassRange; i++) {
      energy += frequencyData[i] * frequencyData[i]; // Square for more pronounced peaks
    }
    energy = Math.sqrt(energy / bassRange) / 255; // RMS of those bins, normalized to 0-1

    // Store energy history (last 30 frames, about half a second at 60 fps)
    this.energyHistory.push(energy);
    if (this.energyHistory.length > 30) {
      this.energyHistory.shift();
    }

    // Calculate local average
    const avgEnergy = this.energyHistory.reduce((sum, e) => sum + e, 0) / this.energyHistory.length;

    // Adaptive threshold: local average times sensitivity, never below
    // minThreshold, then scaled by decayRate to loosen it slightly
    this.threshold = Math.max(avgEnergy * this.sensitivity, this.minThreshold);
    this.threshold *= this.decayRate;

    // Check if current energy exceeds threshold
    const isBeat = energy > this.threshold;

    // Prevent beats too close together (debounce)
    const now = performance.now();
    const timeSinceLastBeat = now - this.lastBeatTime;
    if (isBeat && timeSinceLastBeat > 100) { // Minimum 100ms between beats
      this.lastBeatTime = now;
      return true;
    }

    return false;
  }
}

// Usage
const beatDetector = new BeatDetector();

function animate() {
  requestAnimationFrame(animate);

  analyser.getByteFrequencyData(frequencyData);

  if (beatDetector.detectBeat(frequencyData)) {
    // Trigger beat-reactive visual effects
    triggerBeatVisualization();
  }

  // Continue with regular updates
  updateVisualizations(frequencyData);
}
```

This energy-based beat detection algorithm works well for many types of music, especially those with prominent beats. For more complex music or more accurate detection, consider using the web-audio-beat-detector library, which implements more sophisticated algorithms.
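Once beats are being detected, their timestamps can also give a rough tempo estimate, which is useful for effects that should run in time with the music between beats. The sketch below is a simple heuristic, not the method used by any particular library; `estimateBpm` is a hypothetical helper that takes beat times in milliseconds.

```javascript
// Estimate tempo from a list of beat timestamps (milliseconds, ascending).
// Uses the median interval so that a few missed or spurious beats
// have little influence. Returns null when there is not enough data.
function estimateBpm(beatTimesMs) {
  if (beatTimesMs.length < 2) return null;

  const intervals = [];
  for (let i = 1; i < beatTimesMs.length; i++) {
    intervals.push(beatTimesMs[i] - beatTimesMs[i - 1]);
  }
  intervals.sort((a, b) => a - b);

  const mid = Math.floor(intervals.length / 2);
  const median = intervals.length % 2 === 1
    ? intervals[mid]
    : (intervals[mid - 1] + intervals[mid]) / 2;

  return median > 0 ? 60000 / median : null;
}
```

For example, beats at 0, 500, 1000, 1500 and 2600 ms have a median interval of 500 ms, which corresponds to 120 BPM even though one beat was missed.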

Smoothing and Interpolation

Raw audio data can be noisy and erratic. Applying smoothing and interpolation creates more fluid, visually pleasing animations:

```javascript
// Exponential smoothing for values
class SmoothValue {
  constructor(initialValue = 0, smoothingFactor = 0.3) {
    this.value = initialValue;
    this.target = initialValue;
    this.smoothingFactor = smoothingFactor; // 0-1, higher = faster response
  }

  update() {
    this.value += (this.target - this.value) * this.smoothingFactor;
    return this.value;
  }

  setTarget(newTarget) {
    this.target = newTarget;
  }
}

// Usage
const bassSmoother = new SmoothValue(0, 0.2); // Slow response for bass
const trebleSmoother = new SmoothValue(0, 0.6); // Fast response for treble

function updateVisualizations(frequencyData) {
  // Get raw values
  const bassValue = getFrequencyBandValue(frequencyData, bands.bass);
  const trebleValue = getFrequencyBandValue(frequencyData, bands.treble);

  // Set as targets for smoothers
  bassSmoother.setTarget(bassValue);
  trebleSmoother.setTarget(trebleValue);

  // Get smoothed values
  const smoothBass = bassSmoother.update();
  const smoothTreble = trebleSmoother.update();

  // Use smoothed values for visualization
  updateVisualsWithSmoothedValues(smoothBass, smoothTreble);
}
```

Different smoothing factors can be applied to different frequency bands to create more natural-feeling animations. For example:

- Slower smoothing for bass frequencies creates a weighty, substantial feel

- Faster smoothing for high frequencies creates a more immediate, sparkly response

- Medium smoothing for mid-range creates a balanced, musical feel
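A per-frame smoothing factor behaves differently at 30 fps than at 144 fps. One possible refinement, sketched below under the assumption that you know the elapsed time per frame, is to express smoothing as time constants and to use a fast "attack" with a slower "release" so visuals jump on a hit and then settle gently. `EnvelopeFollower` and its parameter names are hypothetical.

```javascript
// Time-based exponential smoothing with separate attack and release times.
// attackMs / releaseMs are time constants: after that many milliseconds the
// value has covered about 63% of the distance to the target.
class EnvelopeFollower {
  constructor(attackMs = 20, releaseMs = 300) {
    this.attackMs = attackMs;
    this.releaseMs = releaseMs;
    this.value = 0;
  }

  // target: the raw value for this frame; deltaMs: time since the last update.
  update(target, deltaMs) {
    const timeConstant = target > this.value ? this.attackMs : this.releaseMs;
    const alpha = 1 - Math.exp(-deltaMs / timeConstant);
    this.value += (target - this.value) * alpha;
    return this.value;
  }
}
```

In an animation loop, `deltaMs` can be derived from the timestamp that `requestAnimationFrame` passes to its callback. Because the blend factor is computed from elapsed time, two 8 ms updates move the value as far as one 16 ms update.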

Mapping Audio to Visual Parameters

The art of audio visualization lies in creating meaningful mappings between audio characteristics and visual parameters:

| Audio Parameter | Visual Parameter | Effect |
|---|---|---|
| Bass Amplitude | Scale / Size | Creates a sense of power and weight |
| Mid Frequencies | Color Hue | Reflects tonal character of the music |
| High Frequencies | Brightness / Emission | Creates sparkle and detail |
| Beat Detection | Sudden Movements / Bursts | Creates rhythmic visual punctuation |
| Spectral Centroid | Vertical Position | Higher sounds = higher position |
| Spectral Spread | Particle Dispersion | Wider spectrum = more scattered particles |

Effective mappings create a sense of synesthesia—a natural-feeling connection between what is heard and what is seen. The best mappings often follow physical intuition: louder sounds are bigger, higher pitches are higher in space, more complex sounds create more complex visuals.
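A few of the mappings in the table can be sketched as a small pure function that turns audio features into visual parameters. The output ranges (scale 1-3, hue 200-360, and so on) and the names `mapRange` and `mapFeaturesToVisuals` are arbitrary choices for illustration; tune them to your own scene.

```javascript
// Linearly map a value from one range to another, clamping at the ends.
function mapRange(value, inMin, inMax, outMin, outMax) {
  const t = Math.min(1, Math.max(0, (value - inMin) / (inMax - inMin)));
  return outMin + t * (outMax - outMin);
}

// features: { bass, mid, treble } normalized to 0-1, plus centroidHz in Hz.
function mapFeaturesToVisuals(features) {
  return {
    scale: mapRange(features.bass, 0, 1, 1, 3),           // louder bass = bigger
    hue: mapRange(features.mid, 0, 1, 200, 360),          // mids shift the color
    brightness: mapRange(features.treble, 0, 1, 0.2, 1),  // highs add sparkle
    y: mapRange(features.centroidHz, 200, 8000, -1, 1),   // brighter sound = higher
  };
}
```

Keeping the mapping separate from both the analysis code and the rendering code makes it easy to experiment with different pairings without touching either side.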

Real-time vs. Pre-analyzed Audio

There are two main approaches to audio analysis for visualizations:

Real-time Analysis

Advantages:

- Works with live input (microphones, line-in)

- Responsive to any audio source

- No preprocessing required

- Ideal for live performances

Challenges:

- Limited processing time per frame

- No future knowledge of the audio

- More prone to analysis errors

- Higher CPU usage

Pre-analyzed Audio

Advantages:

- More accurate analysis possible

- Can use more complex algorithms

- Future knowledge allows better synchronization

- Lower CPU usage during playback

Challenges:

- Requires preprocessing step

- Only works with known audio files

- Not suitable for live input

- Requires storage for analysis data

For live performances with unknown music, real-time analysis is essential. For prepared performances or installations with known audio, pre-analysis can provide more accurate and sophisticated visualizations.
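As a minimal sketch of the pre-analysis approach, the code below computes an energy envelope for a whole track up front and then looks values up by playback time. It assumes `samples` is a mono array of values in the range -1..1 (for example, the result of `getChannelData(0)` on a decoded `AudioBuffer`); the function names and the returned object shape are hypothetical.

```javascript
// Precompute one RMS energy value per hop across the whole track.
function precomputeEnergy(samples, sampleRate, framesPerSecond = 60) {
  const hop = Math.max(1, Math.floor(sampleRate / framesPerSecond));
  const envelope = [];
  for (let start = 0; start + hop <= samples.length; start += hop) {
    let sumSquares = 0;
    for (let i = start; i < start + hop; i++) {
      sumSquares += samples[i] * samples[i];
    }
    envelope.push(Math.sqrt(sumSquares / hop));
  }
  // hopSeconds records the actual spacing so lookups do not drift
  return { envelope, hopSeconds: hop / sampleRate };
}

// Look up the precomputed energy at a playback time (in seconds).
function energyAt(analysis, timeSeconds) {
  if (analysis.envelope.length === 0) return 0;
  const rawIndex = Math.floor(timeSeconds / analysis.hopSeconds);
  const index = Math.min(analysis.envelope.length - 1, Math.max(0, rawIndex));
  return analysis.envelope[index];
}
```

During playback, the lookup time would typically come from the audio clock rather than the animation clock so that visuals stay aligned with what is heard. Because the whole envelope is known in advance, effects can also look ahead, for instance by reading `energyAt(analysis, t + 0.1)` to start an animation slightly before a loud passage.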


Automating Complex Audio Analysis with AI

While manual audio analysis gives you precise control, implementing sophisticated analysis algorithms requires deep technical expertise and significant development time.

Compeller.ai automates the complex audio analysis process, instantly detecting beats, identifying frequency patterns, and creating professional-quality audio-reactive visualizations—all with no coding required.

- Instant beat detection and frequency analysis

- Advanced pattern recognition in audio files

- Real-time visualization rendering optimized for performance

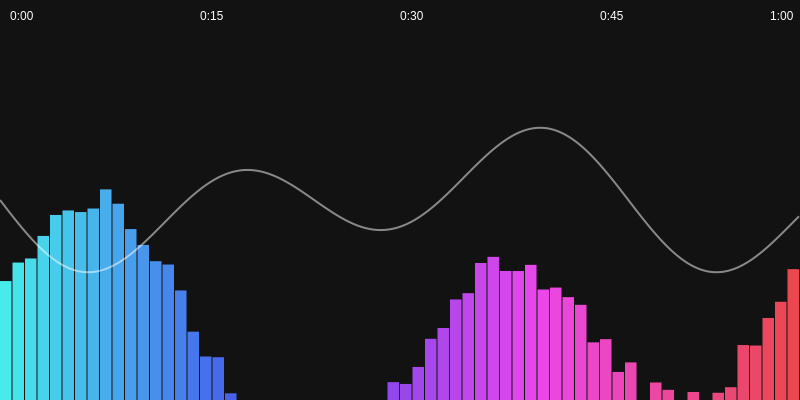

Compeller.ai Visualization